This tutorial walks through capturing Rock Paper Scissors gestures in Virtual Breadboard (VBB) and uploading training and test sets of the gesture data to Edge Impulse, where they are used to train and test a gesture classifier.

Edge Impulse is the leading development platform for machine learning on edge devices. Its portal lets you train machine learning models and deploy them to standalone embedded devices.

The Virtual Breadboard Edge Impulse component can acquire data from virtual or real data sources (via the EDGEY interface) and upload it to a specific Edge Impulse project through the Edge Impulse ingestion service.
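The component handles uploads for you, but it can help to see what an upload contains. The sketch below is based on our reading of Edge Impulse's published data acquisition format and is not VBB's implementation. It wraps X,Y samples in a JSON message and signs it with the project's HMAC key using HMAC-SHA256: the signature is computed with the signature field set to 64 zeros and then swapped in. The function name `build_signed_payload` and the device name/type values are hypothetical, so check the Edge Impulse documentation before relying on the field details.

```python
import hashlib
import hmac
import json
import time


def build_signed_payload(hmac_key, interval_ms, sensors, values, now=None):
    """Return a JSON string in the (assumed) Edge Impulse data acquisition format.

    sensors: list of (name, units) pairs, e.g. [("X", "m"), ("Y", "m")]
    values:  one [x, y] list per sample, spaced interval_ms apart
    """
    message = {
        "protected": {
            "ver": "v1",
            "alg": "HS256",
            "iat": int(time.time() if now is None else now),
        },
        # Sign with a placeholder of 64 zeros, then swap in the real signature.
        "signature": "0" * 64,
        "payload": {
            "device_name": "vbb-virtual-device",  # hypothetical identifier
            "device_type": "VIRTUAL_BREADBOARD",  # hypothetical identifier
            "interval_ms": interval_ms,
            "sensors": [{"name": name, "units": units} for name, units in sensors],
            "values": values,
        },
    }
    # Compact separators, matching JavaScript's JSON.stringify output.
    encoded = json.dumps(message, separators=(",", ":"))
    digest = hmac.new(hmac_key.encode("utf-8"), encoded.encode("utf-8"), hashlib.sha256)
    message["signature"] = digest.hexdigest()
    return json.dumps(message, separators=(",", ":"))
```

Because the placeholder and the real signature have the same length, the signed body differs from the signed-over text only in the signature field, which lets a receiver recompute and compare the HMAC.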

Create Edge Impulse Project

Referring to the edge-impulse-uploader documentation, create a new Rock Paper Scissors Edge Impulse project and locate the API key and HMAC key that the ingestion service needs to upload data.

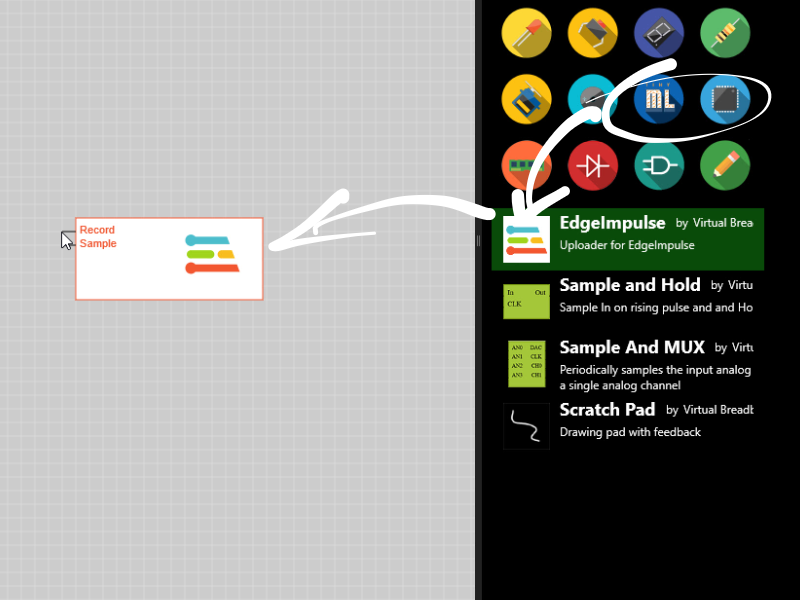

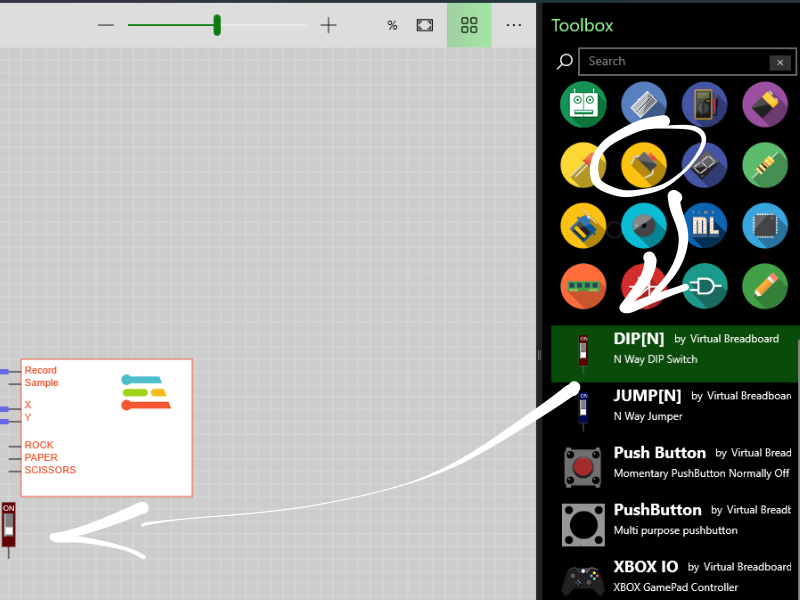

Place EdgeImpulse VBB Component

Place a new VBB Edge Impulse component:

- Click the Toolbox ribbon

- From the Tiny ML group click the EdgeImpulse icon to begin placing

- Position and click to finalise the position

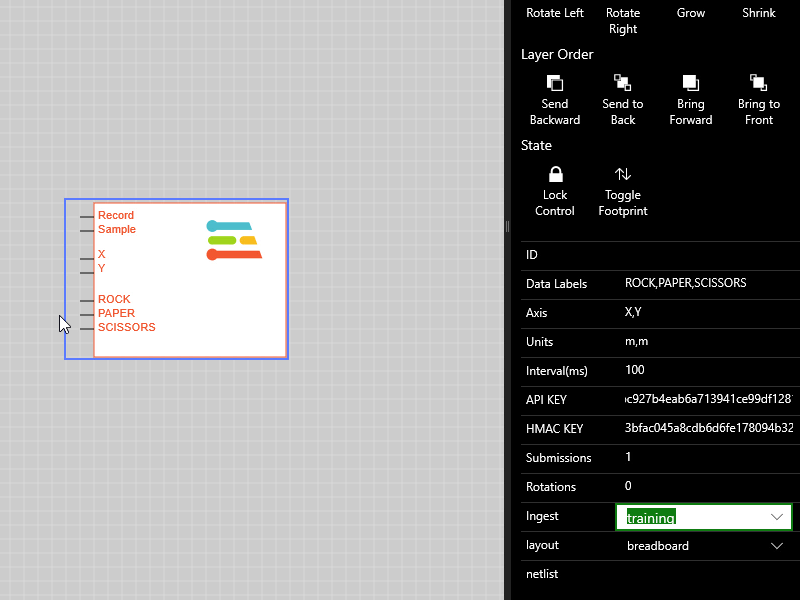

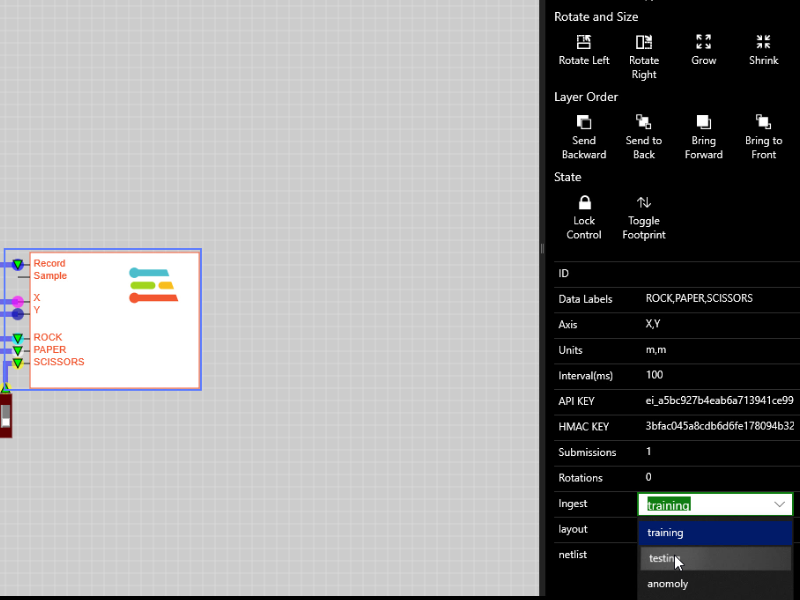

Configure Properties

Click the placed component to show the properties editor, then enter the following property values:

| Property | Value |
|---|---|
| Data Labels | ROCK,PAPER,SCISSORS |
| Axis | X,Y |
| Units | m,m |
| Interval(ms) | 100 |
| API KEY | Copy and Paste from EdgeImpulse Project |
| HMAC KEY | Copy and Paste from EdgeImpulse Project |
| Submissions | 1 |
| Rotations | 0 |
| Ingest | training |
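The comma-separated properties appear to be positional: the first Axis entry pairs with the first Units entry, and so on, while Interval(ms) sets the sampling period. As a rough sanity check, assuming one reading per axis every Interval(ms) over the 2000 ms frame used later, each gesture carries 2000 / 100 = 20 readings per axis, or 40 values for X and Y together. The class below is a hypothetical model of the properties for illustration only; whether the component records exactly 20 readings (or one extra at the frame boundary) is an assumption.

```python
from dataclasses import dataclass


@dataclass
class EdgeImpulseSettings:
    """Hypothetical mirror of the component properties in the table above."""
    data_labels: str = "ROCK,PAPER,SCISSORS"
    axis: str = "X,Y"
    units: str = "m,m"
    interval_ms: int = 100
    ingest: str = "training"

    def labels(self):
        return self.data_labels.split(",")

    def sensors(self):
        # Pair each axis with its unit; the two lists must line up one-to-one.
        names, units = self.axis.split(","), self.units.split(",")
        if len(names) != len(units):
            raise ValueError("Axis and Units must have the same number of entries")
        return list(zip(names, units))

    def samples_per_frame(self, frame_ms):
        # Readings per axis in one capture frame, at one reading every interval_ms.
        return frame_ms // self.interval_ms


settings = EdgeImpulseSettings()
per_axis = settings.samples_per_frame(2000)
print(per_axis, per_axis * len(settings.sensors()))  # 20 40
```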

The test circuit consists of a ScratchPad, which generates X,Y voltages from gestures drawn in 2D, and a DIP component, which sets the label of the gesture being captured.

Components Used

Place Scratch pad

The Scratch Pad component captures mouse or stylus motion as X,Y voltages that can be recorded as gesture motion.

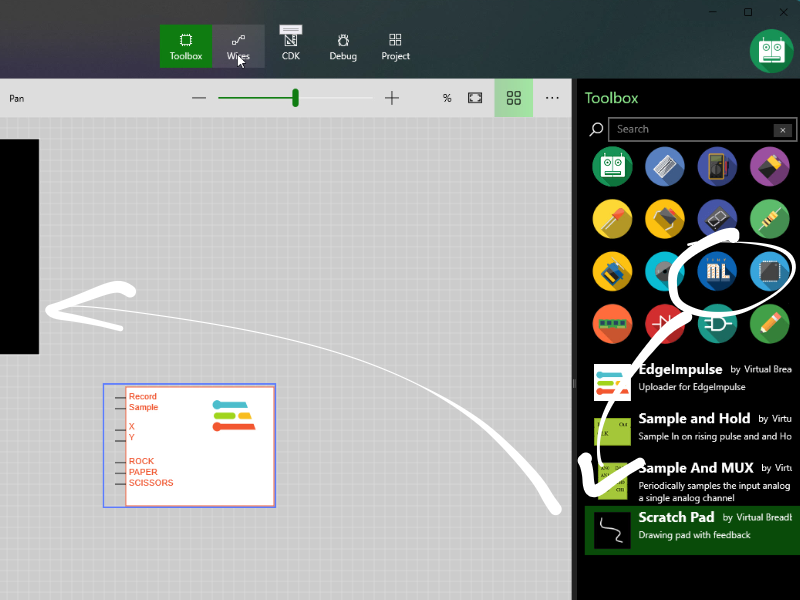

- Click the Toolbox ribbon

- From the Tiny ML group click the ScratchPad icon to begin placing

- Position and click to finalise the position

- Click the component again to select it, then configure its properties as follows:

| Property | Value |
|---|---|
| Mode | record-playback |
| Period | 2 |

Place DIPN component

The DIPN component is an IO component that lets you interactively toggle IO values.

- Click the Toolbox ribbon

- From the Buttons, Dials and Switches group click the DIPN icon to begin placing

- Position and click to finalise the position

- Click the component again to select it, then configure its properties as follows:

| Property | Value |
|---|---|
| Pins | top |
| PinCount | 3 |

Wire Test Circuit

- Click the Wires ribbon

- From the Wire Color group click a wire color to start wire mode

- Wire up the circuit as follows:
  - ScratchPad.X to EdgeImpulse.X
  - ScratchPad.Y to EdgeImpulse.Y
  - ScratchPad.* to EdgeImpulse.Record
  - DIP.1 to EdgeImpulse.ROCK
  - DIP.2 to EdgeImpulse.PAPER
  - DIP.3 to EdgeImpulse.SCISSORS
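Each DIP switch drives one label input, so the label applied to an upload follows from which switch is on. The sketch below models that mapping with a hypothetical helper, `active_label`. The tutorial only ever switches on one DIP at a time, and how the component behaves with several label inputs high is not covered here, so the sketch simply returns None in that case.

```python
# Wiring order from the list above: DIP.1 -> ROCK, DIP.2 -> PAPER, DIP.3 -> SCISSORS.
LABELS = ("ROCK", "PAPER", "SCISSORS")


def active_label(dip_states):
    """Return the label whose DIP switch is on, or None unless exactly one is on."""
    on = [label for label, state in zip(LABELS, dip_states) if state]
    return on[0] if len(on) == 1 else None


print(active_label([True, False, False]))  # ROCK
print(active_label([False, True, True]))   # None (ambiguous)
```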

Gestures are distinct motion sequences that machine learning algorithms can classify. For machine learning to work, you need to record several variations of the same gesture so that the model learns which aspects of the gesture are invariant and which are unique compared with other gestures.

Rock, Paper, Scissors is a game with 3 gestures. Here we repeatedly draw the gestures on the Scratch Pad and upload the gesture data to the Edge Impulse project for training.

We use gestures that can be drawn quickly, in around 2 seconds. Set the Scratch Pad Mode to record-playback with a Period of 2 seconds: each gesture is first recorded (white) in approximately 2 seconds, then time-scaled and played back in exactly 2 seconds to fit the 2000 ms capture frame used later in the training model.

Pre-processing the signal to fit an exact time window in this way makes it easier to train on and classify live data downstream.
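VBB does not describe how record-playback rescales a gesture, but the idea can be sketched: map the recorded points, however long the drawing took, onto a fixed grid of samples covering the 2-second frame. The sketch below assumes linear interpolation and one sample every 100 ms (matching the Interval property); `resample_gesture` is a hypothetical name, not part of VBB.

```python
from bisect import bisect_right


def resample_gesture(times_ms, xs, ys, frame_ms=2000, interval_ms=100):
    """Stretch or squeeze a recorded gesture onto a fixed number of samples.

    times_ms must be strictly increasing; xs and ys are the recorded positions.
    Returns frame_ms // interval_ms [x, y] pairs evenly spaced from the first
    to the last recorded point, using linear interpolation between points.
    """
    n = frame_ms // interval_ms
    if len(times_ms) < 2 or n < 2:
        raise ValueError("need at least two recorded points and two output samples")
    t0, t1 = times_ms[0], times_ms[-1]
    out = []
    for i in range(n):
        # Position of output sample i on the original (recorded) time axis.
        t = t0 + (t1 - t0) * i / (n - 1)
        # Index k of the recorded segment [times_ms[k], times_ms[k + 1]] containing t.
        k = min(bisect_right(times_ms, t) - 1, len(times_ms) - 2)
        w = (t - times_ms[k]) / (times_ms[k + 1] - times_ms[k])
        out.append([xs[k] + w * (xs[k + 1] - xs[k]), ys[k] + w * (ys[k + 1] - ys[k])])
    return out


# A drawing that took 2.5 s still yields 20 samples for the 2000 ms frame.
samples = resample_gesture([0, 1000, 2500], [0.0, 1.0, 2.0], [5.0, 5.0, 5.0])
print(len(samples))  # 20
```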

To start training:

- Press Power On to start the circuit.

- Optionally, save the project.

Train Rock

- Switch on DIP.1 (wired to EdgeImpulse.ROCK) so that uploads are labeled ROCK.

- Repeatedly draw the ROCK gesture, taking about 2 seconds each time.

- After drawing (white), your gesture is replayed (red) in an exact 2-second time frame.

- While the gesture replays, the Edge Impulse component's red record dot appears.

- Once the gesture is uploaded, the red dot disappears and you can record the next gesture.

- Record 10 or more ROCK gestures.

- Switch off DIP.1 when complete.

Train Paper

Repeat the above Train Rock procedure for Paper using DIP.2 => EdgeImpulse.PAPER

Train Scissors

Repeat the above Train Rock procedure for Scissors using DIP.3 => EdgeImpulse.SCISSORS

In addition to the training set, it is important to record additional gestures as a test set, to evaluate the trained model's ability to correctly classify gestures it has not seen before.

- Power OFF the circuit

- Change the Edge Impulse component's Ingest property to testing

- Power ON the circuit

- Repeat the previous steps, recording 5 or so test gestures for each of ROCK, PAPER and SCISSORS
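Switching Ingest from training to testing changes which data set the uploads land in. Based on our reading of the Edge Impulse Ingestion API documentation, each category has its own endpoint, and the API key and label travel as HTTP headers. The sketch below only assembles the URL and headers (it sends nothing); `ingestion_request` is a hypothetical helper, and the endpoint and header names should be confirmed against the current Edge Impulse docs.

```python
INGESTION_BASE = "https://ingestion.edgeimpulse.com/api"


def ingestion_request(ingest, api_key, label, file_name):
    """Return (url, headers) for uploading one signed JSON sample."""
    if ingest not in ("training", "testing"):
        raise ValueError("this tutorial only uses 'training' and 'testing'")
    url = f"{INGESTION_BASE}/{ingest}/data"
    headers = {
        "x-api-key": api_key,      # the project's API key
        "x-label": label,          # e.g. ROCK, PAPER or SCISSORS
        "x-file-name": file_name,  # file name for the uploaded sample
        "Content-Type": "application/json",
    }
    return url, headers


url, headers = ingestion_request("testing", "ei_xxx", "ROCK", "rock.json")
print(url)  # https://ingestion.edgeimpulse.com/api/testing/data
```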

Now that the data has been collected, you can easily train a TinyML model that classifies the gestures using the Edge Impulse portal.

In the Edge Impulse project, select the Impulse Design panel.

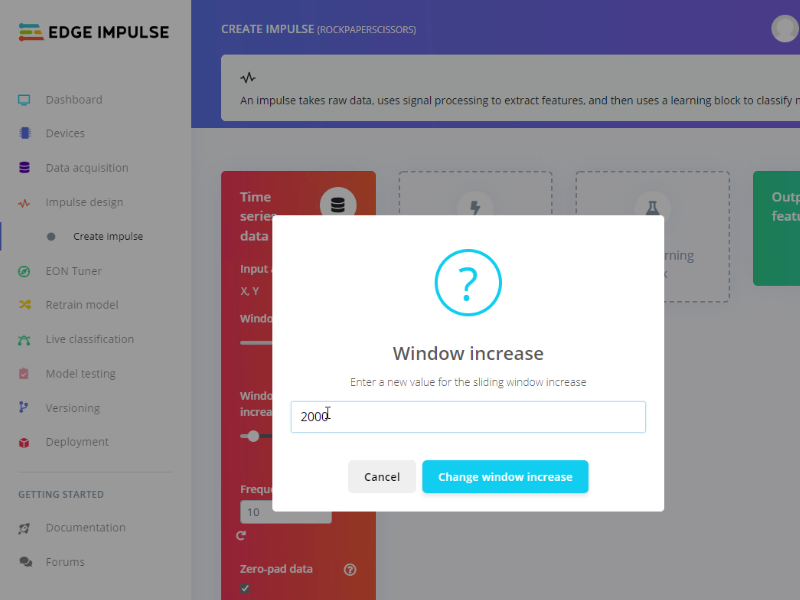

Configure Window Size

Set the window size to 2000 ms, matching the 2-second playback frame from the Scratch Pad.

Add Processing Block

The processing block extracts the features on which the classification neural network runs. The data scientist's job is to work out which features best partition the data, so that the features for each label separate in a recognisable way. In this case the features are very clear, so we can simply process the raw data.
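Processing the raw data means the classifier sees the samples more or less as recorded. As a minimal sketch, assuming the raw block simply flattens each window time step by time step, a 2-second window of X,Y readings at 100 ms becomes a 40-value feature vector. `raw_features` is a hypothetical helper, and the interleaved ordering is an assumption rather than a documented detail of the Raw Data block.

```python
def raw_features(samples):
    """Flatten [[x0, y0], [x1, y1], ...] into [x0, y0, x1, y1, ...]."""
    if not samples:
        raise ValueError("empty window")
    width = len(samples[0])
    if any(len(s) != width for s in samples):
        raise ValueError("every sample must have the same number of axes")
    return [value for sample in samples for value in sample]


window = [[0.1 * i, 0.2 * i] for i in range(20)]  # 20 readings of X and Y
print(len(raw_features(window)))  # 40
```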

To add a raw data processing block:

- Click the Add a processing block panel

- Press the Add button on the Raw Data processing module option

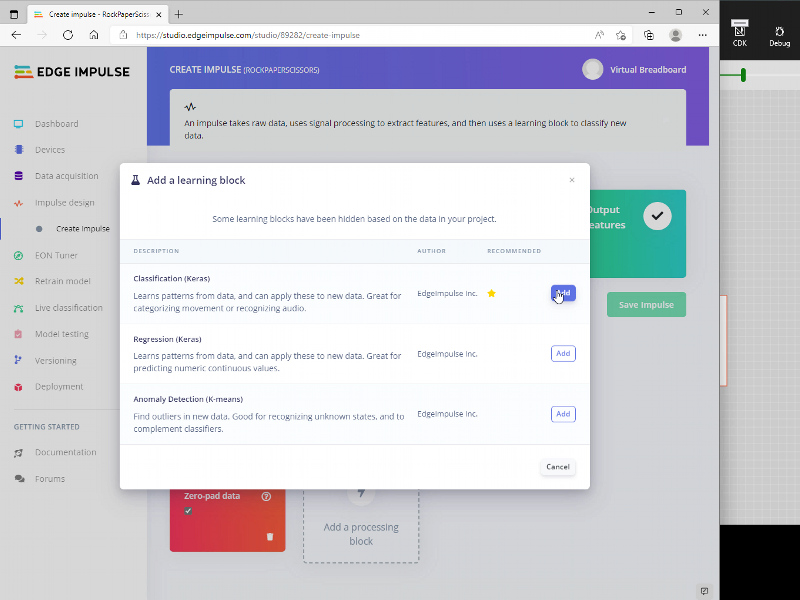

Add Learning Block

The learning block applies a neural network learning model to the features output by the processing block. When a training set is applied to the learning block, it learns to map characteristic data features to specific labels. Rock, Paper, Scissors is a classification task, so we add a Classification learning block.
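A classification network typically ends in one output per label, which is turned into probabilities with a softmax; the label with the highest probability is the prediction. The sketch below shows that final step in plain Python. The label order and the example output values are assumptions for illustration, not values from a trained model, and `classify` is a hypothetical helper.

```python
import math


def classify(outputs, labels=("ROCK", "PAPER", "SCISSORS")):
    """Turn raw network outputs (logits) into per-label probabilities and a prediction."""
    largest = max(outputs)
    exps = [math.exp(z - largest) for z in outputs]  # shift by the max for stability
    total = sum(exps)
    probs = {label: e / total for label, e in zip(labels, exps)}
    return max(probs, key=probs.get), probs


label, probs = classify([2.0, 0.5, -1.0])
print(label)  # ROCK
```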

To add a learning block:

- Click the Add a learning block panel

- Press the Add button on the Classification (Keras) option to add the learning block

Save the Impulse

Click the Save Impulse button to save the settings

Now that the Impulse is configured, the next step is to generate the features and visually inspect how the selected processing block (Raw Data in this case) partitions the data. If the labels are clearly separated visually, the learning block should be able to train an accurate classification model.

To generate features:

- Open the Raw Data panel

- Click the topmost Generate features button to open the Generate features panel

- Click the (green) Generate features button to compute the features

- Visually inspect the 3D chart to confirm that the ROCK, PAPER and SCISSORS features are separated

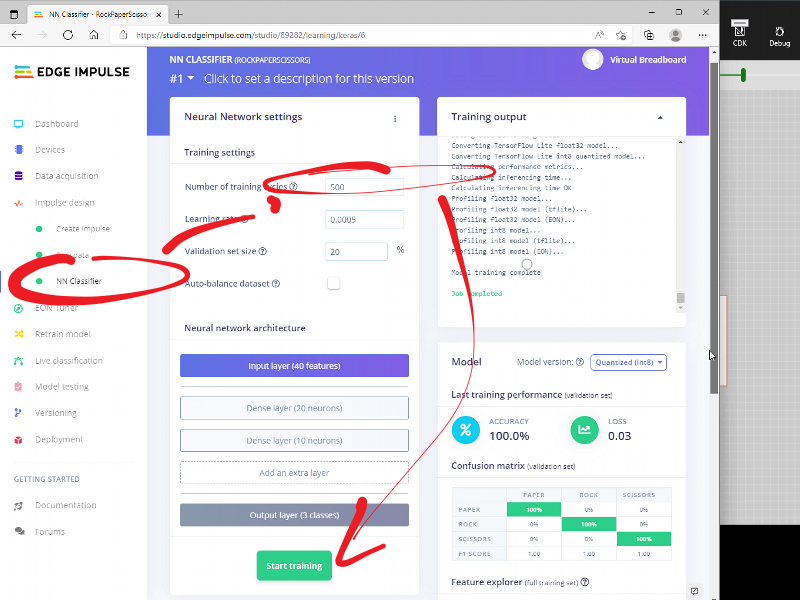
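The 3D chart is a visual check. If you have feature vectors on hand and want a rough numeric counterpart, one option is to compare each label's spread around its own centroid with the distance to the nearest other label's centroid: a spread much smaller than that distance suggests clean separation. This is a simple stand-in chosen for illustration, not what the Edge Impulse feature explorer computes; `separation_report` and the toy data are hypothetical.

```python
import math


def centroid(vectors):
    """Mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def separation_report(features_by_label):
    """Map each label to (mean distance to own centroid, distance to nearest other centroid).

    features_by_label: dict of label -> list of equal-length feature vectors (2+ labels).
    """
    cents = {label: centroid(vs) for label, vs in features_by_label.items()}
    report = {}
    for label, vectors in features_by_label.items():
        spread = sum(math.dist(v, cents[label]) for v in vectors) / len(vectors)
        nearest = min(math.dist(cents[label], c) for other, c in cents.items() if other != label)
        report[label] = (spread, nearest)
    return report


toy = {
    "ROCK": [[0, 0], [0, 1]],
    "PAPER": [[10, 0], [10, 1]],
    "SCISSORS": [[0, 10], [1, 10]],
}
for label, (spread, nearest) in separation_report(toy).items():
    print(label, round(spread, 2), round(nearest, 2))
```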

Next, train the neural network, which computes the network weights from the training set so that it can classify the labeled data.

To generate the neural network model:

- Open the NN Classifier panel

- Set the number of training cycles to your preferred value; try 500 to be sure

- Click Start Training

- Verify the accuracy of the model. It should be 100% for a simple model such as this

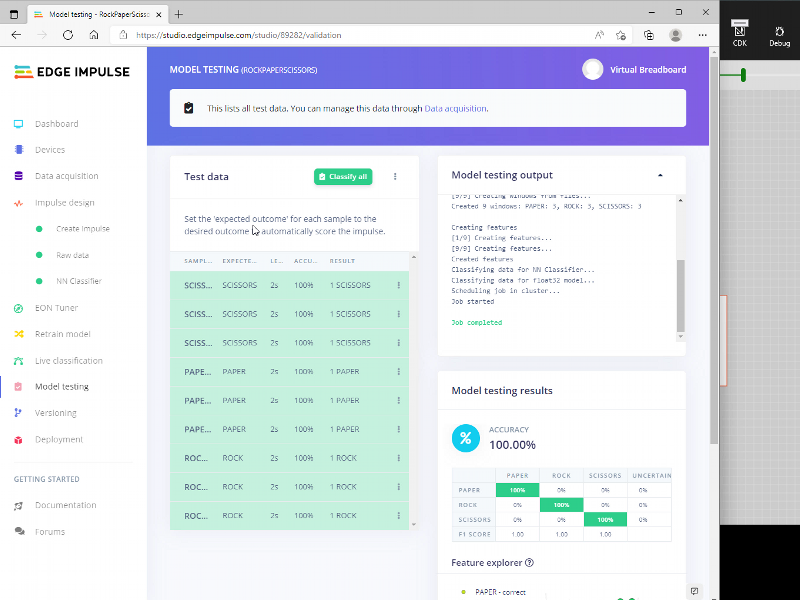

Now that the model is trained, run it against the test set, which it did not see during training, to verify that the neural network is not just matching previously seen data.

To test the model:

- Open the Model Testing panel

- Click the Classify All button

- Verify that the test set was classified correctly. Accuracy should be 100%
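Test accuracy is, in essence, the fraction of test samples whose predicted label matches the expected label, and a confusion count shows which gestures get mixed up. The sketch below computes both from two lists of labels; the helper name `evaluate` is hypothetical, the sample labels are made up for illustration, and it does not attempt to reproduce every detail of how the Edge Impulse portal reports results.

```python
from collections import Counter


def evaluate(expected, predicted):
    """Return (accuracy, confusion), where confusion counts (expected, predicted) pairs."""
    if not expected or len(expected) != len(predicted):
        raise ValueError("need two non-empty label lists of equal length")
    confusion = Counter(zip(expected, predicted))
    correct = sum(n for (e, p), n in confusion.items() if e == p)
    return correct / len(expected), confusion


acc, confusion = evaluate(
    ["ROCK", "ROCK", "PAPER", "SCISSORS"],
    ["ROCK", "PAPER", "PAPER", "SCISSORS"],
)
print(acc)                           # 0.75
print(confusion[("ROCK", "PAPER")])  # 1
```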

Congratulations, you have captured Rock, Paper, Scissors gesture data, built an Impulse, and trained and tested a neural network model that accurately classifies the 3 gestures, all without any programming.

What's next

Go ahead and experiment with different gestures, processing blocks and neural network models to learn more about the trade-offs available to you as a data scientist.

Upcoming Tutorials:

- Deploy the trained neural network model to the Raspberry Pi Pico

- Use EDGEY to transmit 2D gesture information to a live Raspberry Pi Pico and display the classification in VBB